"Serverless" GPU

Need...more.....power

Bet the title got you excited! Lets cut to the chase. What inspired this project? At Argus we wanted to run our proctoring models on a scheduled basis to create confidence scores for students appearing for our exams. Quickly we stumbled on a roadblock - Sagemaker Serverless Inference doesn't support GPUs yet. Did we absolutely need the GPU for inference? Debatable. A reduced runtime from a GPU instance would certainly save us costs. And so began this plan to create a so called serverless GPU.

The Plan

KISS - Keep It Simple Stupid

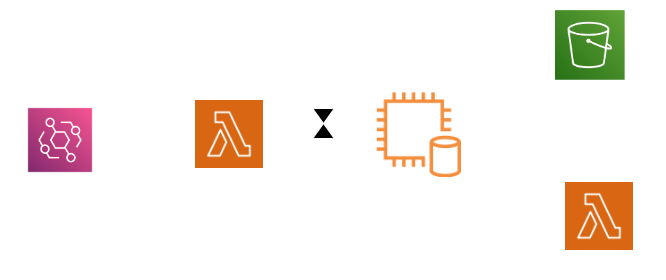

Pretty basic. Use AWS Eventbridge to trigger a lambda that starts the already provisioned and turned off EC2 instance. Once the instance starts, run the script on the EC2 instance using AWS SSM. Once the script runs through, upload a temporary file (could be just dummy data/some metadata about the run etc) to a S3 bucket. This triggers another lambda that basically turns off the EC2 instance. Easy right?

This model extends well too! Say you actually wanted to have an endpoint. Just use lambda behind an API gateway and push the request to SQS while turning on the EC2 instance. When EC2 script polls the queue and finds it empty, write a temporary file which triggers the shutdown lambda.

Specifics

The devil is in the details

The "Startup" Lambda

The Startup Lambda lives for 2 purposes - start the EC2 instance and send the initial commands. For simplicity, we'll skip adding a retry mechanism for now, though it could be a valuable feature for enhancing reliability in the future.

Granting Necessary Permissions

For the lambda to function effectively, it requires specific permissions:

- For EC2 Operations: Attach

ec2:StartInstances,ec2:DescribeInstances, andec2:DescribeInstanceStatusto the Lambda’s role. - For Command Execution with SSM: Include

ssm:SendCommand,ssm:ListCommandInvocations,ssm:ListCommands, andssm:GetCommandInvocationto manage and monitor commands sent via AWS Systems Manager.

With these permissions, the Lambda is all set to manage EC2 instances efficiently, providing a straightforward yet powerful solution for automated management tasks.

The lambda function itself could be as basic as:

import boto3

import os

import time

def lambda_handler(event, context):

ec2 = boto3.client('ec2', region_name=<YOUR AWS REGION>)

ssm_client = boto3.client('ssm', region_name=<YOUR AWS REGION>)

instance_id = <YOUR AWS INSTANCE ID>

try:

ec2.start_instances(InstanceIds=[instance_id])

print(f'Successfully started EC2 instance: {instance_id}')

ec2.get_waiter('instance_running').wait(InstanceIds=[instance_id])

print(f'Instance is now running')

## sleep so that the instance is running by the time you send your commands

time.sleep(30)

response = ssm_client.send_command(

InstanceIds=[instance_id],

DocumentName="AWS-RunShellScript",

Parameters={

"commands": [

<COMMANDS TO RUN THE INFERENCE SCRIPT HERE>

]

},

)

command_id = response['Command']['CommandId']

print(f'Sent SSM command {command_id} to instance {instance_id} to run the script.')

except Exception as e:

print(f'Error starting EC2 instance: {str(e)}')

raise e

The EC2 Instance

To optimize our EC2 instance for its role in inference, it must be preconfigured with the necessary scripts to manage everything from setting up the virtual environment and preparing data to executing inference tasks. A critical function for the instance is to generate a temporary file at the conclusion of its job, whether it’s performing scheduled inference or processing data pulled from an SQS queue until it’s emptied. Naturally, a GPU instance is our go-to choice to harness the needed computational power (quota approval will take a week! So, plan ahead)

Install the SSM agent on the EC2 instance:

sudo yum install -y amazon-ssm-agent

And as part of the EC2 user data, add the following script:

#!/bin/bash

systemctl enable amazon-ssm-agent

systemctl start amazon-ssm-agent

Why not run the inference script itself in the user data script you ask? Its because sending commands via a lambda functions allows us to be more flexible and make the EC2 instance multi-use based on which lambda triggered it.

The "Shutdown" Lambda

Now the trigger lambda has started the EC2 instance and the instance has finished the jobs assigned to it and written a temp file to the S3 bucket. We now need to turn off the instance (otherwise we're still getting billed!). How would we do this?

This is where our shutdown lambda comes in. This is a really simple lambda function. It simply uses s3:ObjectCreated trigger for a special bucket and turns off the EC2 instance we spun up.

Granting necessary permissions

We attach a role that includes ec2:DescribeInstances, ec2:StopInstances and ec2:DescribeInstanceStatus at a minimum. Maybe we want to read some metadata in S3 to figure out which instance to stop?

import boto3

def lambda_handler(event, context):

ec2 = boto3.client('ec2', region_name=<YOUR REGION NAME>)

instance_id = <YOUR INSTANCE ID>

try:

ec2.stop_instances(InstanceIds=[instance_id])

print(f'Successfully stopped EC2 instance: {instance_id}')

except Exception as e:

print(f'Error stopping EC2 instance: {str(e)}')

raise e

Conclusion

Is serverless a misnomer after all?

The limitation of a serverless GPU instance right out of the box is very real. This was simply our attempt at a system which would meet our needs with minimal manual intervention (think what would happen if the job ran forever or the queue never got empty). Is it true serverless? Perhaps no. But we're getting closer to a world with serverless across a wide range of hardware accelerators!